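# Deploying Everything at Once with Docker Compose

This approach uses Docker Compose to deploy all of the Airflow components at once. It is far more convenient than deploying the containers one by one with plain Docker. In the end, each Airflow component runs in its own isolated environment inside a Docker container.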

TIP

If you are new to Docker Compose, I recommend reading "[Docker] docker compose 사용법" first.

# Project Setup

# Creating and Entering the Project Directory

Create a directory to hold the Airflow project and move into it as follows.

```shell
# Create a directory for the Airflow project.
$ mkdir my-airflow-project
$ cd my-airflow-project
```

All subsequent commands are run from this project directory.

# Writing docker-compose.yml

Write docker-compose.yml as follows.

```yaml
version: '3'

networks:
  airflow:
    driver: bridge

services:
  airflow-database:
    container_name: airflow-database
    image: postgres:13
    environment:
      - POSTGRES_USER=airflow
      - POSTGRES_PASSWORD=1234
    volumes:
      - ./data:/var/lib/postgresql/data
    restart: always
    networks:
      - airflow

  airflow-init:
    container_name: airflow-init
    depends_on:
      - airflow-database
    image: apache/airflow:2.2.3-python3.8
    environment:
      - AIRFLOW__CORE__SQL_ALCHEMY_CONN=postgresql+psycopg2://airflow:1234@airflow-database:5432/airflow
    entrypoint: /bin/bash
    command: |-
      -c " \
        airflow db init && \
        airflow users create \
          --username admin \
          --password 1234 \
          --firstname heumsi \
          --lastname jeon \
          --role Admin \
          --email heumsi@naver.com \
      "
    restart: on-failure
    networks:
      - airflow

  airflow-scheduler:
    container_name: airflow-scheduler
    depends_on:
      - airflow-init
    image: apache/airflow:2.2.3-python3.8
    environment:
      - AIRFLOW__CORE__SQL_ALCHEMY_CONN=postgresql+psycopg2://airflow:1234@airflow-database:5432/airflow
      - AIRFLOW__CORE__EXECUTOR=LocalExecutor
    command: airflow scheduler
    restart: always
    networks:
      - airflow

  airflow-webserver:
    container_name: airflow-webserver
    depends_on:
      - airflow-init
    image: apache/airflow:2.2.3-python3.8
    environment:
      - AIRFLOW__CORE__SQL_ALCHEMY_CONN=postgresql+psycopg2://airflow:1234@airflow-database:5432/airflow
      - AIRFLOW__CORE__EXECUTOR=LocalExecutor
    command: airflow webserver
    ports:
      - 8080:8080
    restart: always
    networks:
      - airflow

  airflow-code-server:
    container_name: airflow-code-server
    depends_on:
      - airflow-init
    image: codercom/code-server:4.0.2
    environment:
      - PASSWORD=1234
      - HOST=0.0.0.0
      - PORT=8888
    volumes:
      - ./dags:/home/coder/project
    ports:
      - 8888:8888
    restart: always
    networks:
      - airflow
```


The contents of docker-compose.yml are broken down by component below.

# Docker Network

This part configures the network that the containers use to communicate with one another.

```yaml
networks:
  airflow:
    driver: bridge
```
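Containers attached to the same user-defined bridge network can reach one another by service name, which is why the connection strings in this file use `airflow-database` as the host. As a rough sketch only (the helper name `can_connect` and its default timeout are illustrative assumptions, not part of the original setup), a reachability check could look like this:

```python
import socket


def can_connect(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers name-resolution failures, refused connections, and timeouts.
        return False
```

Run from inside a container on the `airflow` network, `can_connect("airflow-database", 5432)` would be expected to return `True` once Postgres is accepting connections. From the host machine the service name normally does not resolve, so the same call would return `False`.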

# Meta Database

This part deploys the Meta Database (the `airflow-database` service), together with the `airflow-init` service that initializes it.

```yaml
services:
  airflow-database:
    container_name: airflow-database
    image: postgres:13
    environment:
      - POSTGRES_USER=airflow
      - POSTGRES_PASSWORD=1234
    volumes:
      - ./data:/var/lib/postgresql/data
    restart: always
    networks:
      - airflow

  airflow-init:
    container_name: airflow-init
    depends_on:
      - airflow-database
    image: apache/airflow:2.2.3-python3.8
    environment:
      - AIRFLOW__CORE__SQL_ALCHEMY_CONN=postgresql+psycopg2://airflow:1234@airflow-database:5432/airflow
    entrypoint: /bin/bash
    command: |-
      -c " \
        airflow db init && \
        airflow users create \
          --username admin \
          --password 1234 \
          --firstname heumsi \
          --lastname jeon \
          --role Admin \
          --email heumsi@naver.com \
      "
    restart: on-failure
    networks:
      - airflow
```
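The connection string passed to the Airflow containers packs several settings into one URI. The sketch below takes it apart with the standard library, purely to show which piece corresponds to which Postgres setting; the function name `describe_conn` is an illustrative assumption. Note that the compose file does not set `POSTGRES_DB`, so the `airflow` database name relies on the Postgres image defaulting the database name to the value of `POSTGRES_USER`.

```python
from urllib.parse import urlsplit

# Connection string copied from docker-compose.yml.
CONN = "postgresql+psycopg2://airflow:1234@airflow-database:5432/airflow"


def describe_conn(uri: str) -> dict:
    """Split a SQLAlchemy-style connection URI into its components."""
    parts = urlsplit(uri)
    dialect, _, driver = parts.scheme.partition("+")
    return {
        "dialect": dialect,                   # database type
        "driver": driver,                     # Python DB driver
        "user": parts.username,               # matches POSTGRES_USER
        "password": parts.password,           # matches POSTGRES_PASSWORD
        "host": parts.hostname,               # the compose service name
        "port": parts.port,                   # Postgres port inside the network
        "database": parts.path.lstrip("/"),   # database name
    }
```

Calling `describe_conn(CONN)` makes it easy to see that the host is the `airflow-database` service name rather than `localhost`, since each container has its own network namespace.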

# Scheduler

This part deploys the Scheduler.

```yaml
services:
  airflow-scheduler:
    container_name: airflow-scheduler
    depends_on:
      - airflow-init
    image: apache/airflow:2.2.3-python3.8
    environment:
      - AIRFLOW__CORE__SQL_ALCHEMY_CONN=postgresql+psycopg2://airflow:1234@airflow-database:5432/airflow
      - AIRFLOW__CORE__EXECUTOR=LocalExecutor
    command: airflow scheduler
    restart: always
    networks:
      - airflow
```
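The environment variables above follow Airflow's `AIRFLOW__{SECTION}__{KEY}` naming convention, where each variable overrides one option in one section of the Airflow configuration. The following is a minimal sketch of that mapping; the helper `parse_airflow_env` is a hypothetical name introduced for illustration and is not an Airflow API.

```python
from typing import Tuple


def parse_airflow_env(name: str) -> Tuple[str, str]:
    """Map an AIRFLOW__{SECTION}__{KEY} variable name to (section, key)."""
    prefix = "AIRFLOW__"
    if not name.startswith(prefix):
        raise ValueError(f"not an Airflow config variable: {name!r}")
    # Split on the first double underscore after the prefix; the key itself
    # may contain single underscores (e.g. SQL_ALCHEMY_CONN).
    section, sep, key = name[len(prefix):].partition("__")
    if not sep or not section or not key:
        raise ValueError(f"expected AIRFLOW__SECTION__KEY, got: {name!r}")
    return section.lower(), key.lower()
```

Under this convention, `AIRFLOW__CORE__EXECUTOR=LocalExecutor` sets the `executor` option in the `[core]` section, and `AIRFLOW__CORE__SQL_ALCHEMY_CONN` sets `sql_alchemy_conn` in the same section.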

# Webserver

This part deploys the Webserver.

```yaml
services:
  airflow-webserver:
    container_name: airflow-webserver
    depends_on:
      - airflow-init
    image: apache/airflow:2.2.3-python3.8
    environment:
      - AIRFLOW__CORE__SQL_ALCHEMY_CONN=postgresql+psycopg2://airflow:1234@airflow-database:5432/airflow
      - AIRFLOW__CORE__EXECUTOR=LocalExecutor
    command: airflow webserver
    ports:
      - 8080:8080
    restart: always
    networks:
      - airflow
```

# Code Server

This part deploys the Code Server.

```yaml
services:
  airflow-code-server:
    container_name: airflow-code-server
    depends_on:
      - airflow-init
    image: codercom/code-server:4.0.2
    environment:
      - PASSWORD=1234
      - HOST=0.0.0.0
      - PORT=8888
    volumes:
      - ./dags:/home/coder/project
    ports:
      - 8888:8888
    restart: always
    networks:
      - airflow
```

# Deployment

Run docker-compose.yml with the following command.

```shell
$ docker-compose up
```

You can check whether the containers were deployed correctly as follows.

```shell
$ docker-compose ps
       Name                     Command                State    Ports
------------------------------------------------------------------------------------
airflow-code-server   /usr/bin/entrypoint.sh --b ...   Up       8080/tcp, 0.0.0.0:8888->8888/tcp
airflow-database      docker-entrypoint.sh postgres    Up       5432/tcp
airflow-init          /bin/bash -c  \                  Exit 0
                        airflow  ...
airflow-scheduler     /usr/bin/dumb-init -- /ent ...   Up       8080/tcp
airflow-webserver     /usr/bin/dumb-init -- /ent ...   Up       0.0.0.0:8080->8080/tcp
```
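Container state alone does not confirm that Airflow itself is working. As a complementary check, the Airflow webserver exposes a `/health` endpoint that reports the status of the metadatabase and the scheduler. The sketch below assumes the webserver is reachable at `http://localhost:8080`, as published by the port mapping in this setup; the function names are illustrative assumptions.

```python
import json
from urllib.request import urlopen


def fetch_health(base_url: str = "http://localhost:8080", timeout: float = 5.0) -> dict:
    """Fetch the JSON document served by the webserver's /health endpoint."""
    with urlopen(f"{base_url}/health", timeout=timeout) as resp:
        return json.load(resp)


def is_healthy(payload: dict) -> bool:
    """Return True only if both reported components are 'healthy'."""
    components = ("metadatabase", "scheduler")
    return all(
        payload.get(name, {}).get("status") == "healthy" for name in components
    )
```

With the stack running, `is_healthy(fetch_health())` gives a single yes/no answer. A missing or unhealthy component yields `False`, which can happen for a short while after startup, before the scheduler has sent its first heartbeat.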

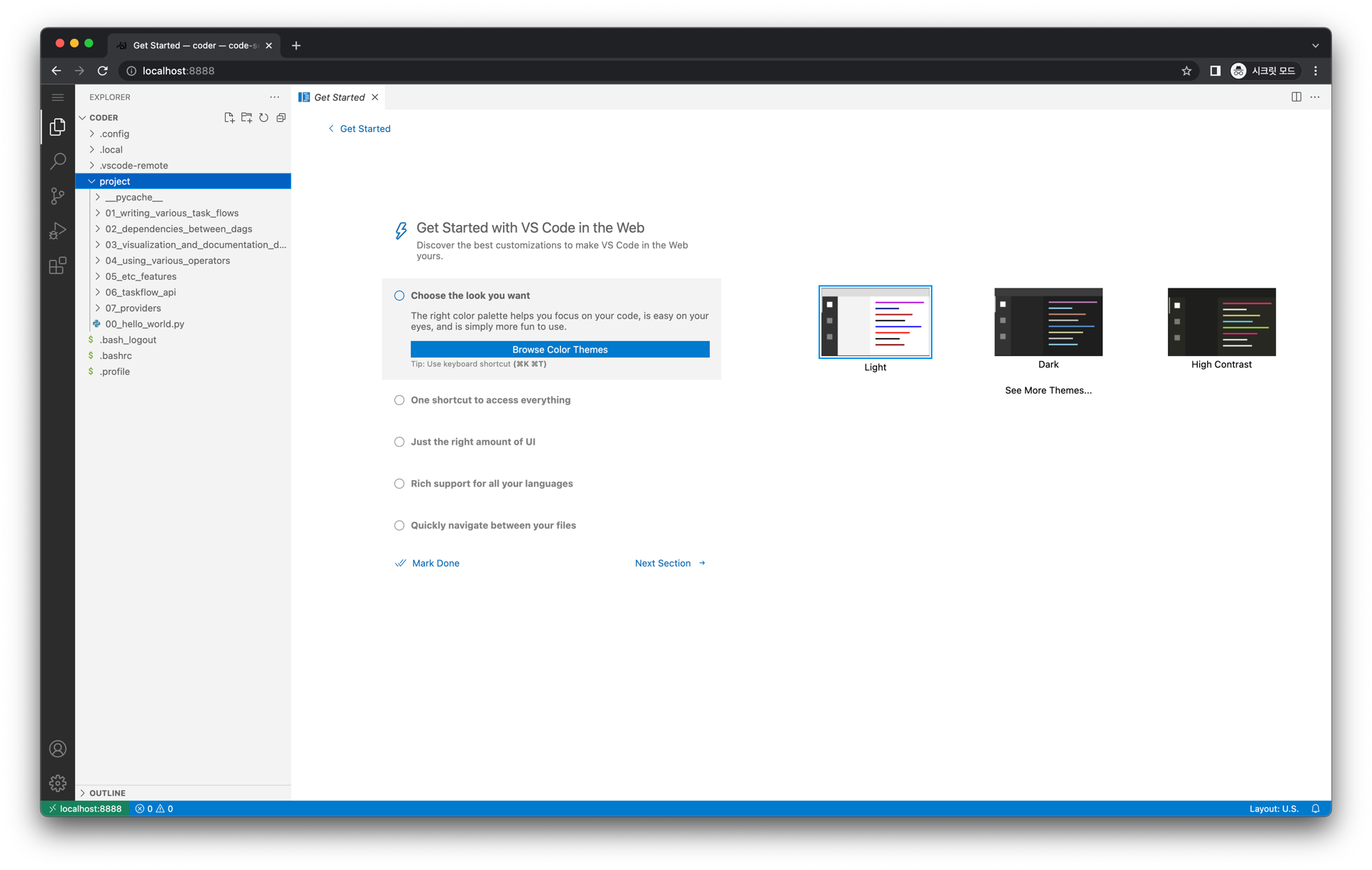

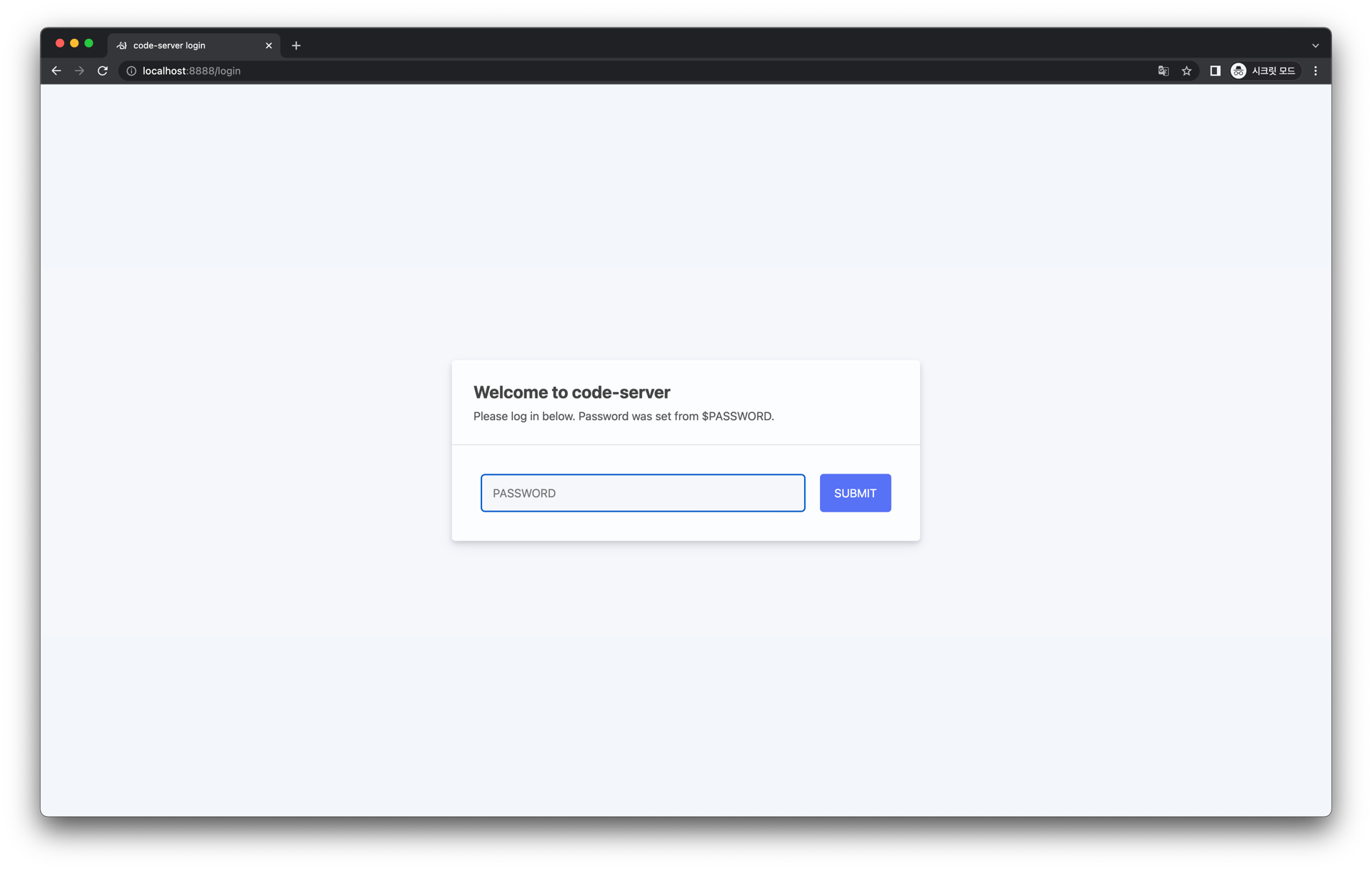

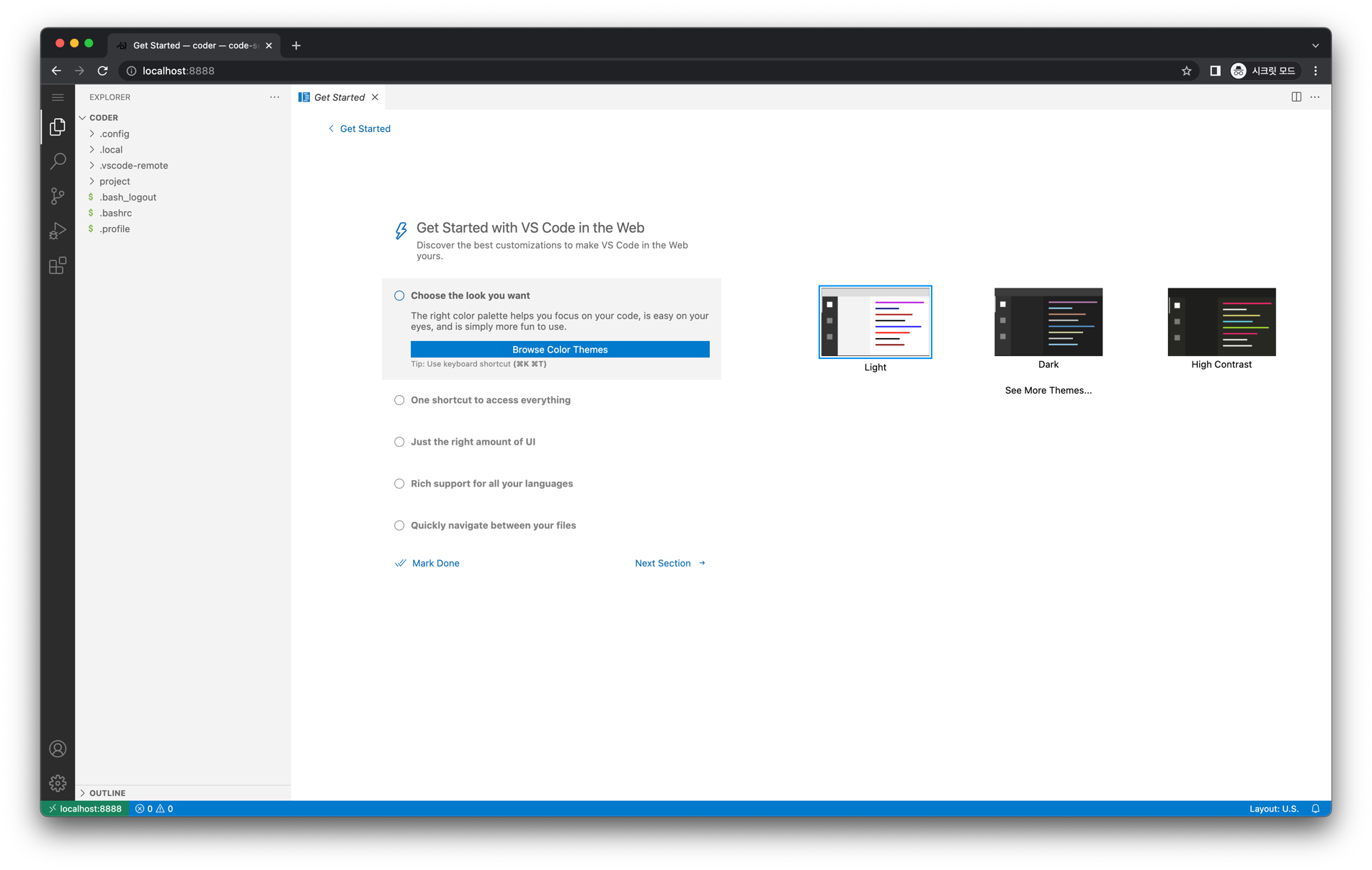

# Code Server

Open http://localhost:8888 in a browser.

Enter 1234, the password set at deployment time. You should then see the Code Server screen.

Click project in the Explorer tab on the left to see the files inside the mounted dags/ directory.